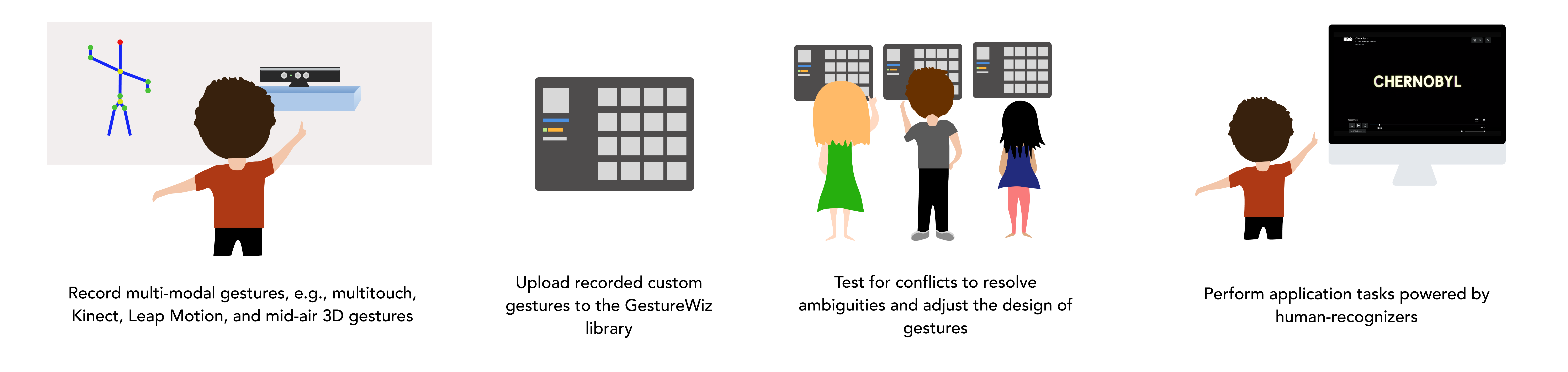

How do we prototype for 3D gestures?

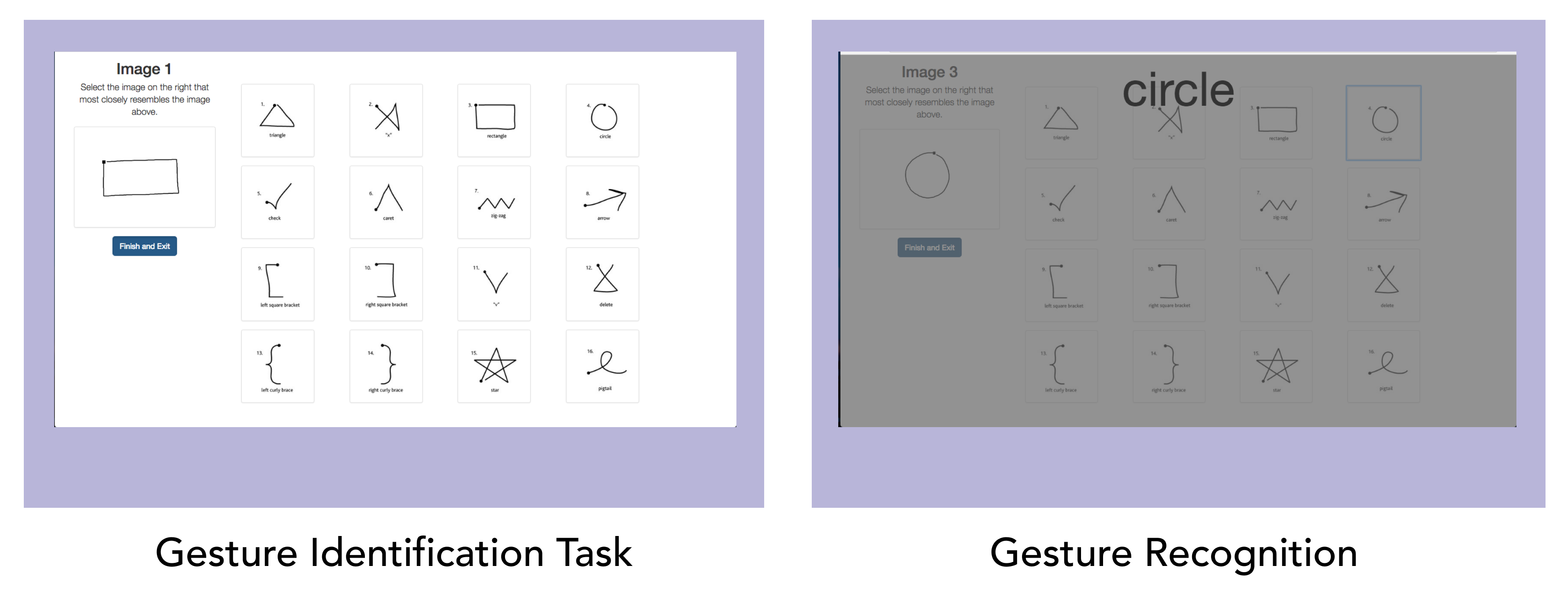

Designers and researchers often rely on simple gesture recognizers such as Wobbrock et al.’s $1 recognizer for rapid user interface prototypes. They do so because its 16 gestures are well established and well studied, and therefore provide a good baseline. However, most existing recognizers aren’t sufficient because they:

- Are limited to a particular input modality

- Have a pre-trained set of gestures

- Cannot be easily combined with other recognizers

Simply put, creating prototypes that employ advanced touch and mid-air gestures still requires significant technical experience and programming skills.