Intelligent, context-aware personal assistant powering Samsung's ecosystem of devices, from smartphones to televisions, and offering users multiple ways to interact with their devices through voice, text, or touch.

Designed Bixby's entity extraction framework achieving 95% intent accuracy at <100ms latency for 200M+ users across 300M+ devices

Back in 2014, voice assistants like Siri, Google Assistant and Alexa were command-driven and forgetful. If you asked 'What's the weather?' and then followed up with 'What about tomorrow?', they'd fail. Users got frustrated when their voice assistant didn't really answer their questions. Simple queries worked, but complex ones failed because these assistants lost context.

Our goal at Samsung was to build a truly conversational assistant that remembered context across multiple turns and domains. This meant understanding users' needs and the technology's limitations, creating a technical framework that works, and implementing it in a fully functioning system.

The system should be able to help with simple tasks, such as setting timers, and handle complex ones, such as creating a photo album of recently taken pictures from a given day and sharing it with family and friends.

As a software engineer on Samsung's Bixby team, I built the technical infrastructure to make that possible.

I led and defined the context-awareness framework that enabled the voice assistant to understand natural language and maintain conversation context across multiple domains such as news, weather, location, alarms, and more. To do so, I collaborated with teams across design, strategy and engineering.

Conducted technical evaluations of user interaction patterns to inform NLU model design. Defined a framework for context: interaction patterns, sentence construction and discourse paths. Evaluated 3rd-party content providers and defined technical integration requirements.

Designed and implemented the context-awareness framework connecting NLU, ASR, and domain modules across 8 languages. Built and maintained core NLP backend infrastructure supporting 10+ domains (news, weather, location, health, media, etc.).

Created an Android prototype and demo server integrating NLU/ASR models with REST APIs. Achieved 95% intent classification accuracy at <100ms latency, serving 10M+ users during beta.

Partnered with multiple stakeholders across engineering, design, strategy, product and 3P vendors. Presented the concept vision to 800+ employees at an internal conference.

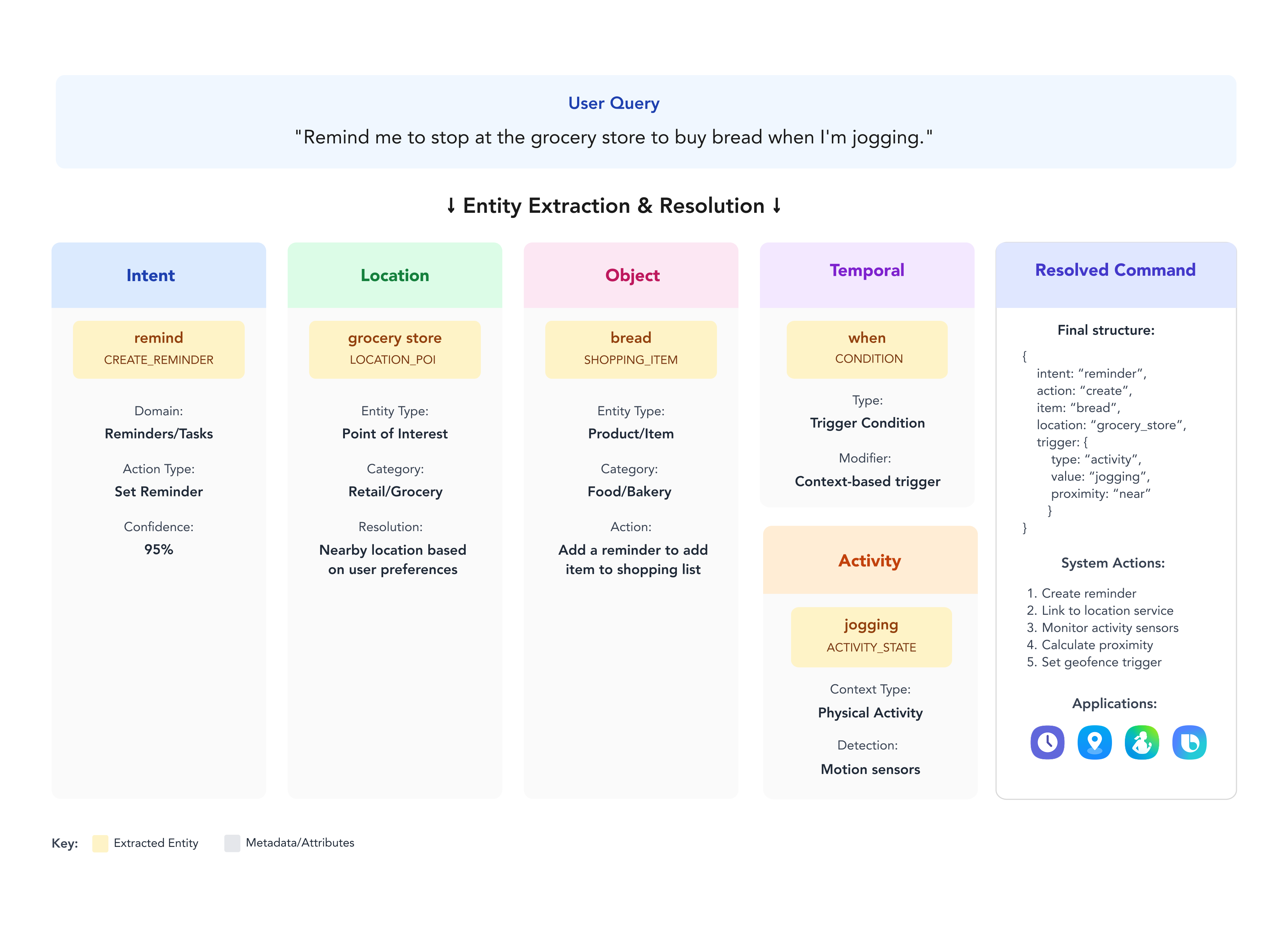

Let's look at how a machine understands you and see what the entity resolution mapping for the following example looks like:

There are several things at play here. A task that seems simple to a user needs multiple applications to work together: it has to invoke the native Clock, Maps and Samsung Health applications.

While Clock is a system application, Maps and Samsung Health are cloud-based applications that need to make calls to the backend. The backend has to fetch these responses from multiple applications and then create a response and speak in a language that a user can understand. All this needs to be done in a very short period of time.

When all these work seamlessly, it seems magical!

The rightmost column shows how all these entities get structured into a machine-readable format that the system can act on: creating the reminder, linking location services, monitoring activity sensors, and setting up geofence triggers.
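To make "machine-readable format" concrete, here is a minimal sketch of what such a structured frame could look like. The class names (`Entity`, `IntentFrame`), the field names, the intent label, and the example values are all hypothetical, chosen only for illustration; they are not Bixby's actual schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Entity:
    kind: str     # hypothetical entity type, e.g. "time" or "activity"
    value: str    # normalized value extracted from the utterance
    service: str  # service assumed to act on this entity


@dataclass
class IntentFrame:
    intent: str
    entities: list = field(default_factory=list)


# Illustrative frame for an utterance such as
# "Wake me up at 6am when I'm jogging" (values are assumptions).
frame = IntentFrame(
    intent="create_reminder",
    entities=[
        Entity("time", "06:00", "clock"),
        Entity("activity", "jogging", "health"),
        Entity("location", "current", "maps"),
    ],
)

# Serialize to JSON so downstream services can consume the frame.
payload = json.dumps(asdict(frame), indent=2)
print(payload)
```

Tagging each entity with the service that should act on it is one simple way to let a backend decide which applications to call for a given request.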

Why is it hard? Context matters. Period.

There are several factors that come into play when we talk about conversational intelligent assistants. One such factor is location. It matters where you are located -- Seoul or San Francisco or Bangalore or London -- as the response may or may not be relevant for you.

Other context-awareness factors include time zones, languages and locales, which affect local information such as news, weather and restaurant reservations.

Users don't say "Hey Bixby, using the Weather domain, what's the temperature?" They switch between domains mid-conversation. We had to build a context manager that tracked conversation history and routed queries to the right domain.
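The idea of tracking history and routing by domain can be sketched in a few lines. This is a toy illustration, not the production design: the `ContextManager` class, the `DOMAIN_KEYWORDS` table, and the keyword matching (standing in for a trained intent classifier) are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical keyword table standing in for a trained intent classifier.
DOMAIN_KEYWORDS = {
    "weather": {"weather", "temperature", "rain", "forecast"},
    "clock": {"alarm", "timer", "wake"},
    "news": {"news", "headlines"},
}


@dataclass
class ContextManager:
    """Tracks conversation turns and routes each query to a domain."""

    history: list = field(default_factory=list)  # (query, domain) pairs

    def classify(self, query: str) -> Optional[str]:
        """Return the first domain whose keywords appear in the query."""
        cleaned = query.lower().replace("?", "").replace("'s", "")
        words = set(cleaned.split())
        for domain, keywords in DOMAIN_KEYWORDS.items():
            if words & keywords:
                return domain
        return None

    def route(self, query: str) -> str:
        """Route a query, falling back to the previous turn's domain."""
        domain = self.classify(query)
        if domain is None and self.history:
            # No explicit domain cue: inherit from the most recent turn.
            domain = self.history[-1][1]
        domain = domain or "fallback"
        self.history.append((query, domain))
        return domain


ctx = ContextManager()
print(ctx.route("What's the weather?"))    # weather
print(ctx.route("What about tomorrow?"))   # weather (inherited from context)
print(ctx.route("Set an alarm for 6am"))   # clock
```

The follow-up "What about tomorrow?" carries no domain cue of its own, so the sketch resolves it from the previous turn. That is the behavior a context-free, command-driven assistant lacks.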

A single query like "Wake me up at 6am when I'm jogging" requires coordinating Clock (system), Maps (cloud), and Health (cloud) services. We built an orchestration layer that parallelized API calls and handled failures gracefully.
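A minimal sketch of that fan-out pattern, assuming Python's `asyncio` rather than whatever the production stack used. The service stubs (`call_clock`, `call_maps`, `call_health`), the `guarded` helper, and the timeout value are hypothetical; the Health stub fails on purpose to show the degraded path.

```python
import asyncio


# Hypothetical service stubs; real calls would hit device or cloud APIs.
async def call_clock(query: str) -> dict:
    return {"service": "clock", "status": "ok"}


async def call_maps(query: str) -> dict:
    await asyncio.sleep(0.01)  # simulate a network round trip
    return {"service": "maps", "status": "ok"}


async def call_health(query: str) -> dict:
    await asyncio.sleep(0.01)
    raise ConnectionError("health backend unavailable")  # simulated failure


async def guarded(name: str, coro, timeout: float) -> dict:
    """Await one call, turning timeouts and errors into a result dict."""
    try:
        return await asyncio.wait_for(coro, timeout=timeout)
    except asyncio.TimeoutError:
        return {"service": name, "status": "timeout"}
    except Exception as exc:
        return {"service": name, "status": "error", "detail": str(exc)}


async def orchestrate(query: str, timeout: float = 1.0) -> list:
    """Fan out to all services concurrently and collect every outcome."""
    calls = {
        "clock": call_clock(query),
        "maps": call_maps(query),
        "health": call_health(query),
    }
    # gather() runs the guarded calls concurrently and preserves order.
    return await asyncio.gather(
        *(guarded(name, coro, timeout) for name, coro in calls.items())
    )


results = asyncio.run(orchestrate("Wake me up at 6am when I'm jogging"))
for result in results:
    print(result)
```

Because each call is wrapped individually, one failing or slow service does not cancel the others. The caller still gets the Clock and Maps results and can decide how to respond without Health.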

Voice interactions feel broken above 100ms. We optimized our NLU pipeline to classify intents and extract entities in under 100ms while maintaining 95% accuracy.

The product was unveiled in March 2017 at the Samsung Galaxy S8 Unpacked event and officially rolled out worldwide in July 2017. The context-awareness framework I helped build became foundational to Bixby's success:

200M+ Bixby users (as of Nov 2022)

300M+ Bixby-enabled devices

95% intent classification accuracy serving 10M+ users

<100ms latency

Building Bixby's context-awareness system gave me a deep understanding of conversational AI's technical constraints: latency budgets, model accuracy trade-offs, and multi-domain orchestration.

When I transitioned to product design, this engineering background became my superpower. I design conversations knowing exactly what's technically feasible, how much latency each interaction adds, and where ML models will struggle. This makes me a better designer for AI products.